TIP: For Storage admins and VMware adminsĬustomer admins use our VASA UI ( ) so they can instantly see association between datastores and LUNs and associated objects like hostgroups etc. Hopefully, google searches will grab this and avoid any more vCenter DB hacking and have customers continue to avail of benefits of Hitachi VASA Provider to enable efficient vVols and VMFS environments.

#DATASTORE USAGE ON DISK ALARM UPDATE#

I will provide update on what we provided them around automated UNMAP for VMFS 5 in subsequent blog and wisdom learned from vVols customers. # Determines the alarm level for a red alarm. # This value must be a value between 0 and 100. # Determines the alarm level for a yellow alarm.

#DATASTORE USAGE ON DISK ALARM FREE#

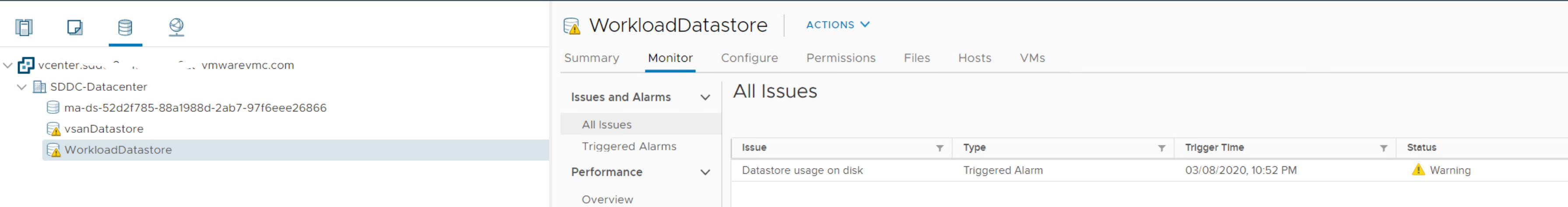

Highly recommended to run UNMAP service to free up disk space.Restart the VP service from VASA UI (or reboot VM).Change the default yellow (65) and red (80) alarms to desired values (suggest no larger than 90,95).Edit properties file, vi VasaProvider.properties.Create a backup of properties file, cp VasaProvider.properties.cd /usr/local/hitachivp-b/tomcat/webapps/VasaProvider/META-INF/.SSH into Hitachi VASA Provider appliance.Acknowledge and Reset to green existing alarms (on 6.7, go to Datastore/Monitor/Triggered Alarms).When you deploy Hitachi Storage (VASA) Provider into the environment, you get the option to change these threshold values. These thresholds are set by a VASA Provider. While I suspected that they had not run UNMAP operations very frequently, they did want to continue running at higher utilization level. Up to now, they were having to hack vCenter DB to temporarily clear the alerts and disable Hitachi VASA, so losing out on the value it provides for VMFS (automated tagging etc.) ,never mind a key block for enabling VMware vVols. I did 'gently' remind them that these type of daily/weekly datastore space management issues go away with vVols datastore with its logical container but we had to get them to happy spot first with their VMFS datastoresįortunately, the answer is simple (albeit) not well documented to address the VMFS alerts/alarms. Worse, depending on vSphere version/update, the latter alert (thin provisioning) would not allow them to deploy new VMs on those datastores with the dreaded “The operation is not allowed in the current state of the datastore”. Some of these customer’s run their VMFS datastores hot >80% and these alerts/alarms were triggering. Monitors whether the thin provisioning threshold on the storage array exceeds for volumes backing the datastore. 
As a VMware admin, you may be familiar with “ Datastore usage on disk ” and “ Thin-provisioned volume capacity threshold exceeded ” alerts Thin-provisioned volume capacity threshold exceeded Interestingly, this first issue was blocking their efforts to actually test VMware vSphere vVols on Hitachi VSP Storage. So first to the alarms/alerts on VMFS datastore usage. I'll also share some insights on how customers are dynamically reclaiming storage as they expand their vVols footprint in a part 4.

They were evaluating/engineering an architecture around VMware vSphere vVols but wanted to get their existing VMFS datastores spring-cleaned. In this part 1, I’ll address vCenter datastore alarms/alerts they were dealing with and in part 2, I’ll address the steps required if you want to verify automatic unmap for VMFS6 datastores down to unmap I/Os and in part 3, what we provided to enable them with some UNMAP powercli automation for their existing VMFS 5 datastores. I recently encountered a cluster of customers who were dealing with datastore space management issues.
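The backup-and-edit steps for VasaProvider.properties could also be scripted. Below is a minimal Python sketch, not a Hitachi-provided tool, assuming the threshold entries are plain `key=value` lines. The post does not show the actual property key names, so the helper takes them as parameters; the key names in the demo (`example.yellow.threshold`, `example.red.threshold`) and the `.bak` backup suffix are hypothetical. The illustrative `alarm_level` function shows why a datastore running at 85% trips the default red (80) threshold but stays green at 90/95; treating "at or above the threshold" as triggering is an assumption.

```python
"""Sketch: adjust the datastore-usage alarm thresholds in VasaProvider.properties.

ASSUMPTIONS (not from Hitachi documentation):
- threshold entries are plain `key=value` lines;
- the real key names are unknown, so callers pass them in;
- the `.bak` backup suffix is an arbitrary choice.
"""
import shutil
import tempfile
from pathlib import Path


def alarm_level(used_pct: float, yellow: int, red: int) -> str:
    """Classify datastore usage (percent) against yellow/red thresholds.

    Assumption: an alarm fires once usage reaches its threshold.
    """
    if used_pct >= red:
        return "red"
    if used_pct >= yellow:
        return "yellow"
    return "green"


def validate_thresholds(yellow: int, red: int) -> None:
    """Check threshold values before writing them."""
    for name, value in (("yellow", yellow), ("red", red)):
        # Per the file's comments, each value must be between 0 and 100.
        if not 0 <= value <= 100:
            raise ValueError(f"{name} threshold {value} is outside 0-100")
    # Assumption: yellow should stay below red, as with the defaults (65/80).
    if yellow >= red:
        raise ValueError("yellow threshold must be lower than red threshold")


def set_thresholds(path: Path, yellow_key: str, red_key: str,
                   yellow: int, red: int) -> Path:
    """Back up `path`, then rewrite the two threshold lines; return the backup path."""
    validate_thresholds(yellow, red)
    backup = path.with_name(path.name + ".bak")  # hypothetical backup name
    shutil.copy2(path, backup)

    new_values = {yellow_key: str(yellow), red_key: str(red)}
    found = set()
    out_lines = []
    for line in path.read_text().splitlines():
        key, sep, _ = line.partition("=")
        key = key.strip()
        # Only rewrite non-comment lines whose key matches one we were given.
        if sep and key in new_values and not line.lstrip().startswith("#"):
            out_lines.append(f"{key}={new_values[key]}")
            found.add(key)
        else:
            out_lines.append(line)

    missing = set(new_values) - found
    if missing:
        # Leave the original file untouched if either key is absent.
        raise KeyError(f"keys not found in {path}: {sorted(missing)}")
    path.write_text("\n".join(out_lines) + "\n")
    return backup


if __name__ == "__main__":
    # Demo on a throwaway file; these key names are HYPOTHETICAL.
    demo = Path(tempfile.mkdtemp()) / "VasaProvider.properties"
    demo.write_text(
        "# Determines the alarm level for a yellow alarm.\n"
        "example.yellow.threshold=65\n"
        "# Determines the alarm level for a red alarm.\n"
        "example.red.threshold=80\n"
    )
    set_thresholds(demo, "example.yellow.threshold", "example.red.threshold", 90, 95)
    print(demo.read_text())
    print(alarm_level(85, 65, 80), alarm_level(85, 90, 95))
```

However the file is edited, the restart step (VP service from the VASA UI, or a VM reboot) still applies.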
